IBM crossed a transformer with an SSM and got ‘Bamba’

In collaboration with CMU, Princeton, and University of Illinois, IBM Research built an open-source LLM that combines the expressive power of a transformer with the runtime speed of a state-space model. Key features will soon be added to IBM Granite 4.0.

The transformer architecture behind today’s large language models has shown an uncanny ability to generate human-like text. Part of its effectiveness comes from its self-attention mechanism, which allows the model to weigh all the words in an input sequence when generating a response.

The problem comes as conversations get longer. Because each new token attends to every token that came before it, the cumulative cost of generation grows quadratically with the length of the sequence. If the context window doubles in size, the cost of processing the context and generating a response doesn’t just double; it quadruples.
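To make that arithmetic concrete, here is a minimal Python sketch that counts the query-key scores a causal self-attention layer computes over a sequence. It assumes a single attention head and ignores constant factors such as head dimension and layer count; `attention_pairs` is a hypothetical helper for illustration, not part of any library.

```python
def attention_pairs(n_tokens: int) -> int:
    """Count the query-key scores causal self-attention computes for n_tokens.

    Token i attends to itself and every earlier token, so the total is
    1 + 2 + ... + n = n * (n + 1) / 2, which grows as O(n^2).
    """
    return n_tokens * (n_tokens + 1) // 2


for n in (4_000, 8_000, 16_000):
    print(f"{n:>6,} tokens -> {attention_pairs(n):,} attention scores")

# Doubling the context roughly quadruples the work.
ratio = attention_pairs(8_000) / attention_pairs(4_000)
print(f"8k-token / 4k-token cost ratio: {ratio:.3f}")
```

The ratio lands just under 4 because of the `+ 1` term, which matters less and less as sequences grow.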

This “quadratic bottleneck” is often behind that frustrating lag between asking the model a question and getting an answer. It also creates a lot of redundant computing. By the time ChatGPT popularized the transformer in 2022, researchers were already searching for alternative architectures.

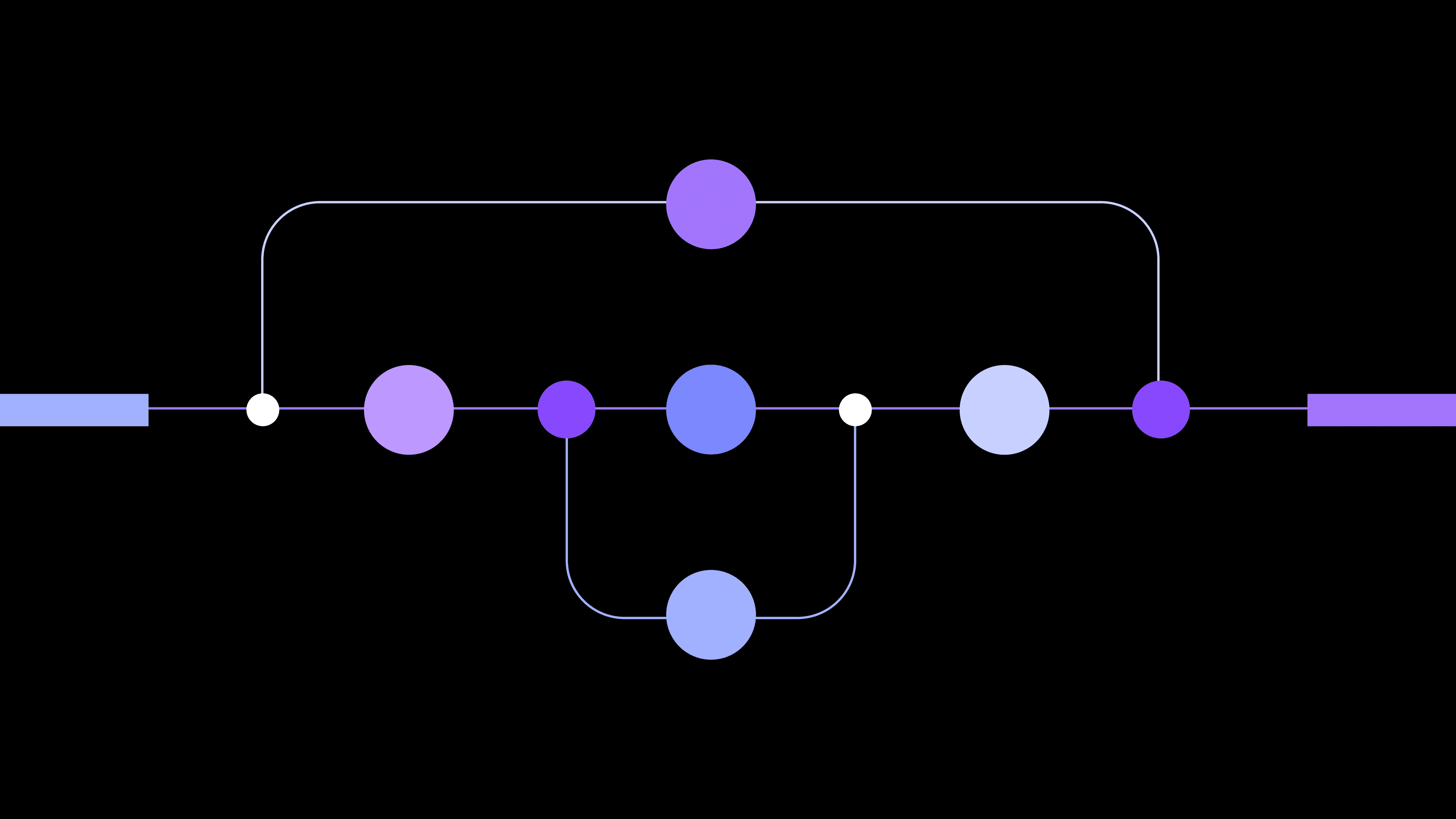

State-space models (SSMs), and transformers interleaved with SSM layers, have emerged as two possible solutions. IBM Research has just open-sourced its first hybrid experiment: Bamba, a model that can run as quickly as an SSM and process long sequences as skillfully as a transformer. Many of Bamba’s innovations will carry over into IBM’s next-generation Granite 4.0 models, due in the coming months.

By sharply reducing the memory needed for the transformer’s key-value (KV) cache, Bamba-9B has shown it can run at least twice as fast as transformers of similar size while matching their accuracy. “Everything comes back to the KV cache reduction,” says Raghu Ganti, the IBM researcher leading the project. “More throughput, lower latency, longer context length.”
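Why the KV cache matters so much can be seen from a back-of-the-envelope size estimate. The sketch below assumes a hypothetical decoder-only transformer (32 layers, 8 key-value heads of dimension 128, 16-bit values); these defaults are illustrative assumptions, not the configuration of Bamba or any specific model.

```python
GIB = 1024 ** 3


def kv_cache_bytes(seq_len: int, n_layers: int = 32, n_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2,
                   batch_size: int = 1) -> int:
    """KV cache size for a decoder-only transformer (hypothetical defaults).

    The leading factor of 2 is for keys and values: every attention layer
    stores one key vector and one value vector per KV head for every token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


for seq_len in (4_096, 32_768, 1_048_576):
    print(f"{seq_len:>9,} tokens -> {kv_cache_bytes(seq_len) / GIB:6.1f} GiB per sequence")
```

Under these assumptions the cache grows from 0.5 GiB at 4K tokens to 128 GiB at roughly 1M tokens, and it scales again with batch size, which limits how many long requests a GPU can serve at once.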


The most important model you’ve never heard of

State-space models come nowhere close to matching the name recognition of transformers, but they’ve been used for decades to model dynamic systems.

“They are the bread and butter of electrical engineering — signal processing, robotics, and control theory,” says Ankit Gupta, an IBM researcher who has played a key role in adapting SSMs to deep learning. “Any field that uses time-series data would use state-space models to analyze it.”

The mathematical equations SSMs are built on can be applied to electrical activity in the brain, the weather, and even the stock market. From a series of observations, an SSM calculates a fixed-size “hidden state” that captures the essential properties of the system. Think of the state as a summary of the past. When new data comes in, the model updates the hidden state, without increasing its size, and uses it to predict what will happen next.
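A minimal sketch of that idea is a discrete, linear, time-invariant SSM written in plain Python. The matrices `A`, `B`, `C` and their values are illustrative assumptions; SSM layers in neural networks use much larger states and compute their parameters differently.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain-Python matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def run_ssm(inputs: Vector, A: Matrix, B: Vector, C: Vector) -> Vector:
    """Discrete linear state-space model with scalar input and output.

    h_t = A h_{t-1} + B x_t   (fold the new observation into the state)
    y_t = C . h_t             (read a prediction out of the state)
    """
    h = [0.0] * len(B)  # hidden state; its size stays fixed for the whole sequence
    outputs = []
    for x in inputs:
        h = [ah + b * x for ah, b in zip(matvec(A, h), B)]
        outputs.append(sum(c * hi for c, hi in zip(C, h)))
    return outputs


# Two state channels: one that forgets slowly (0.9) and one that forgets fast (0.5).
A = [[0.9, 0.0], [0.0, 0.5]]
B = [1.0, 1.0]
C = [0.5, 0.5]
print(run_ssm([1.0, 0.0, 0.0], A, B, C))
```

However long the input, the state `h` holds just two numbers; a single impulse at the first step fades from the output as the state decays.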

SSMs crossed over to neural networks in 2021, when Albert Gu and his collaborators at Stanford released S4, an SSM that applied state variables to language. Like the transformer and the recurrent neural network (RNN) before it, the SSM was good at processing sequences of words. But it could handle long sequences more skillfully than RNNs and far faster than transformers.

While a transformer attends to all the words in the context window when outputting a response, an SSM maintains a compressed hidden state that summarizes past information. Keeping only this summary requires less memory and speeds up inference.

S4 made a splash on Long Range Arena, a benchmark that compares models by their ability to handle long sequences, but it was difficult to implement. Gupta, then an AI resident at IBM, helped Gu and his team simplify the model using diagonal state spaces. Their “diagonal” SSM shrank S4’s 1,000 lines of code to 10. Gupta later helped introduce a gating mechanism for filtering out irrelevant information, allowing SSMs for the first time to match the “expressivity,” or sequence-modeling skill, of transformers.
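A rough sketch of why a diagonal state matrix simplifies things: each state channel updates independently, so one step is an elementwise multiply-add rather than a full matrix-vector product. The second function adds a toy input-dependent gate to show how gating can filter inputs; that gate (a sigmoid of the input scaling what gets written) is an illustrative assumption, not the published formulation, and all names and parameters here are hypothetical.

```python
import math
from typing import List


def diagonal_ssm_step(h: List[float], x: float, a: List[float],
                      b: List[float]) -> List[float]:
    """One recurrence step of an SSM whose state matrix is A = diag(a)."""
    # Each channel evolves independently: h_i <- a_i * h_i + b_i * x.
    # That is O(N) work per step, versus O(N^2) for a dense N x N matrix.
    return [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]


def gated_diagonal_ssm_step(h: List[float], x: float, a: List[float],
                            b: List[float], w_gate: float,
                            b_gate: float) -> List[float]:
    """Toy gated variant: an input-dependent gate decides how much of x is written."""
    # g = sigmoid(w_gate * x + b_gate) lies in (0, 1). Inputs that drive g
    # toward 0 are largely filtered out, and the state mostly just decays.
    g = 1.0 / (1.0 + math.exp(-(w_gate * x + b_gate)))
    return [ai * hi + g * bi * x for ai, hi, bi in zip(a, h, b)]


print(diagonal_ssm_step([1.0, 2.0], 1.0, [0.5, 0.25], [1.0, 2.0]))
print(gated_diagonal_ssm_step([1.0, 2.0], 1.0, [0.5, 0.25], [1.0, 2.0],
                              w_gate=0.0, b_gate=-20.0))
```

With the gate nearly closed, the same input barely touches the state, which is the filtering behavior the paragraph describes.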

That team also unveiled what may be the first transformer-SSM hybrid. “Exploring hybrids made sense,” said Gupta, who now works on IBM’s Granite Vision models. “We could use standard attention blocks to handle text with local dependencies while leveraging SSMs to do longer-range contextualization.”
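One plausible way to picture such a hybrid is a stack of mostly SSM layers with an attention layer interleaved at a fixed interval. The sketch below uses a hypothetical 32-layer stack with one attention layer in every eight; the ratio and placement are assumptions for illustration, not Bamba’s actual configuration. It also estimates how much of a pure transformer’s KV cache such a stack would still need, assuming its attention layers have the same shape.

```python
from typing import List


def hybrid_layer_plan(n_layers: int, attention_every: int) -> List[str]:
    """Hypothetical hybrid stack: SSM layers, with attention every `attention_every` layers."""
    return ["attention" if (i + 1) % attention_every == 0 else "ssm"
            for i in range(n_layers)]


def kv_cache_fraction(plan: List[str]) -> float:
    """Share of a same-depth pure transformer's KV cache the hybrid still needs.

    Only attention layers cache keys and values; SSM layers keep a fixed-size state.
    """
    return plan.count("attention") / len(plan)


plan = hybrid_layer_plan(n_layers=32, attention_every=8)
print(plan.count("ssm"), "SSM layers,", plan.count("attention"), "attention layers")
print(f"KV cache vs. pure transformer: {kv_cache_fraction(plan):.1%}")
```

Under these assumptions, the hybrid keeps 12.5% of the KV cache, and that remainder is the part that still grows with context length.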

In 2023, Gu, then a professor at CMU, and Tri Dao, at Princeton, unveiled Mamba, a gated SSM variant; its successor, Mamba2, followed in 2024. Together they helped inspire a wave of hybrids, with names like Samba and MambaFormer. Last year, Nvidia confirmed that these new hybrids could outperform either architecture on its own while dramatically speeding up inference, work that culminated in its release of Nemotron-H.

Overcoming the KV cache bottleneck

From the start, IBM Research has made efficiency the cornerstone of its Granite LLMs for enterprise. As IBM Granite models have become smaller and more capable, researchers set their sights on the quadratic bottleneck. When Nvidia’s results came out, IBM researchers validated them internally and moved forward with building their own hybrid, Bamba-9B.

They sought out Mamba’s creators, Gu and Dao, along with Minjia Zhang, a professor at the University of Illinois at Urbana-Champaign. Together, they selected Nvidia’s Mamba2 architecture and chose to make just about everything associated with Bamba open-source: the training recipes, the data, the data loader IBM designed for large-scale distributed training, and a quantization framework aimed at cutting storage and inference costs.

They initially trained Bamba on 2 trillion tokens (words and parts of words). Encouraged by the results, they added another trillion tokens and shrank the model from 18 GB to 9 GB through quantization, reducing its bit width from Mamba2’s 16-bit floating-point precision to 8 bits. On key benchmarks, Bamba has performed on par with Meta’s Llama-3.1 8B model, which was trained on seven times more data, an achievement Ganti attributes to Bamba’s design and high-quality training data.
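The 18 GB and 9 GB figures follow from storing each weight in 2 bytes versus 1 byte, assuming roughly 9 billion parameters as the name Bamba-9B suggests. The sketch below checks that arithmetic and shows one simple way to map weights to 8 bits. The symmetric per-tensor absmax int8 scheme is an illustrative stand-in: the article does not say which 8-bit format or scheme Bamba’s quantization framework uses, and the function names are hypothetical.

```python
from typing import List, Tuple


def weight_storage_gb(n_params: float, bits_per_weight: int) -> float:
    """Storage for the weights alone (ignores KV cache, activations, metadata)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor absmax quantization to signed 8-bit integers.

    Illustrative scheme only: maps [-max|w|, +max|w|] onto [-127, 127].
    """
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the 8-bit codes."""
    return [v * scale for v in q]


print(weight_storage_gb(9e9, 16), "GB at 16 bits")
print(weight_storage_gb(9e9, 8), "GB at 8 bits")

weights = [0.5, -1.27, 0.0, 0.031]
q, scale = quantize_int8(weights)
print(q, dequantize_int8(q, scale))
```

Each recovered weight is within half a quantization step of the original, which is the precision traded away for halving storage.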

Their next hurdle was optimizing vLLM to run SSMs. “Virtual” LLM has emerged as the go-to open-source inference server for LLMs, and the team behind Bamba worked closely with Red Hat to integrate the model into the platform. “SSMs are difficult to support, because you need bespoke state management,” said Tyler Smith, a technical staff member at Red Hat and a vLLM committer.

When Bamba was released late last year, Ganti invited the open community to help improve it. “Let’s overcome the KV-cache bottleneck together!” he wrote in Bamba’s intro on Hugging Face.

Trained on 4,000-token sequences, Bamba can capably handle 32,000-token conversations. But Ganti said he thinks it can go to 1 million tokens or more, and run up to five times faster than a transformer as vLLM incorporates more support for SSMs.

The refrain of La Bamba, the Mexican folk song that Ritchie Valens made famous, goes: Para bailar La Bamba/Se necesita una poca de Gracia. All you need to dance the beat is a little grace.

The same could be said for beating the transformer’s quadratic bottleneck.
